Spiral Worlds

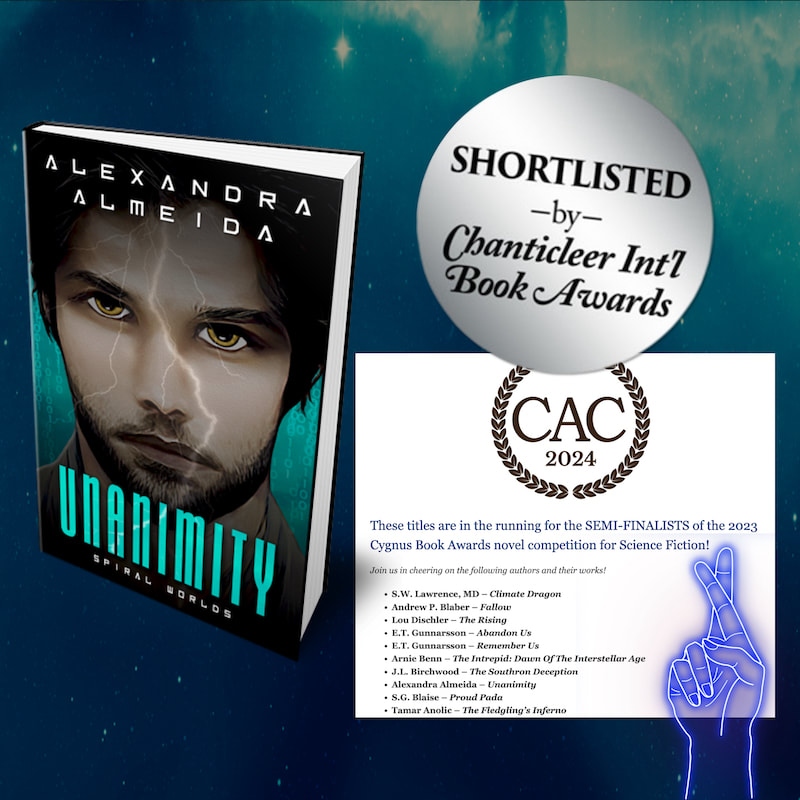

Unanimity: Cygnus Finalist
2/4/2024

WOO-HOO! Unanimity has moved forward in the judging rounds, from the 2023 CYGNUS Science Fiction Semi-Finalists to the 2023 Cygnus Book Awards Finalists. It is now in the running for the 2023 Cygnus 1st Place and Grand Prize awards.

Goodreads Giveaway
1/5/2024

We’re giving away 100 ebook copies of this special edition collection, which includes Unanimity and the newly released Parity. This giveaway is open to the US and Canada only.

Goodreads Book Giveaway: Spiral Worlds by Alexandra Almeida. The giveaway ends January 31, 2024. See the giveaway details at Goodreads.

Unanimity: Cygnus Semi-Finalist
1/3/2024

Unanimity has moved forward in the judging rounds for the 2023 Cygnus Book Awards! #HappyDance

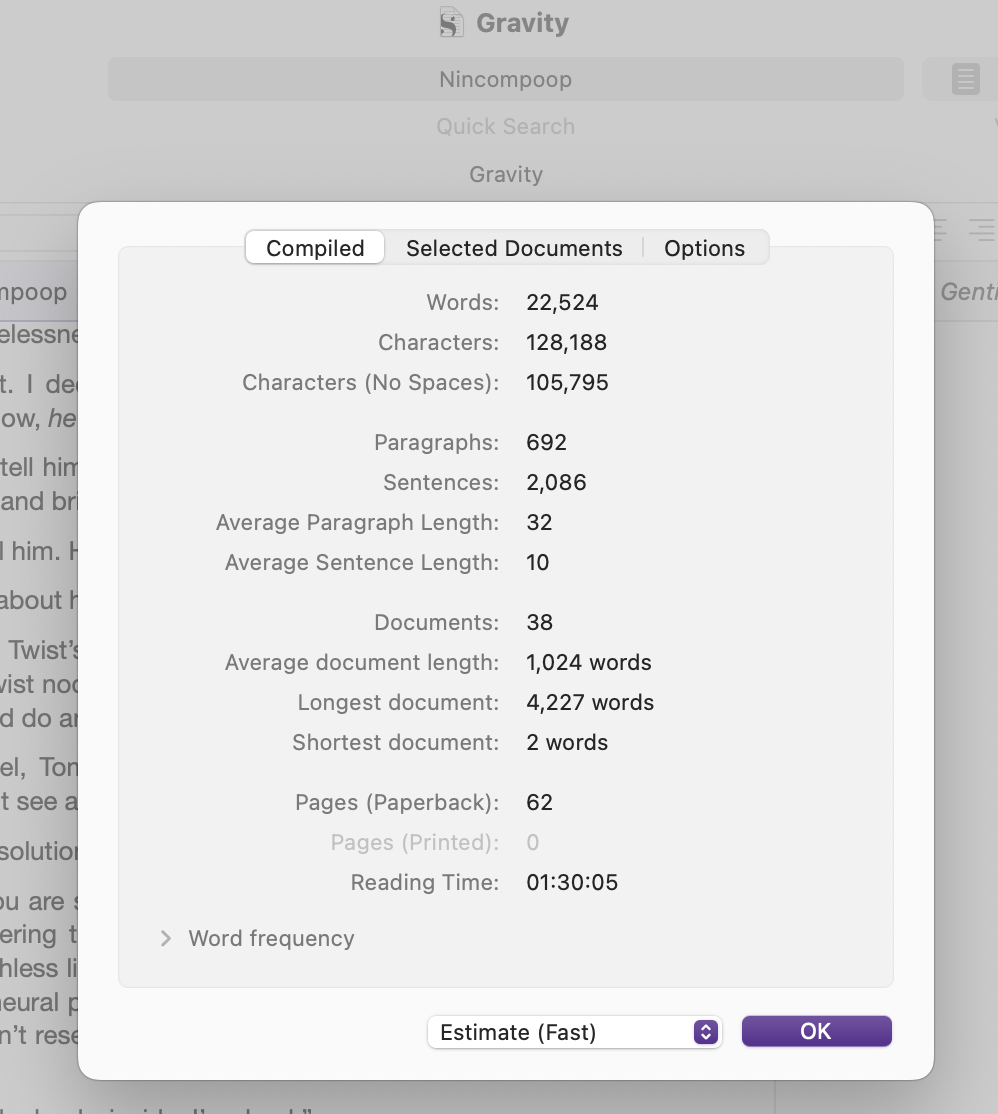

HNY and Nincompoop!
12/29/2023

Hello friends,

This year has been monumental for me. As I celebrated my 50th birthday, I made a conscious decision to release everything in my life that didn't contribute positively to myself or the world. My focus shifted to what I truly love: writing, guiding, advising, envisioning, creating, innovating, and, when necessary, standing up as a force of resistance.

It's been an incredible year in non-fiction publishing, where I've put to use all that I learned from a decade spent experimenting with fiction. While non-fiction often leans towards commercial endeavors, my work under this pen name is different. I don't write to fit a genre, follow trends, or cater to the whims of publishers or agents. I write because I have to.

To everyone who gave "Unanimity," "Parity," or any of my short stories a chance: THANK YOU. To those who didn't finish them, and to those who gave low or high ratings, or no ratings at all: THANK YOU. While my writing is primarily for me, it's always a joy when it resonates with others, brings them enjoyment, or provokes thought.

I recently unearthed the final part of the SP backstory (22,000 words) that I wrote back in 2019, and it's been a pleasant surprise. Yes, there’s a chapter called ‘Nincompoop,’ obviously… Now my focus turns to the future and to the troublemakers in the series, so I’m anticipating a riot of a year ahead.

Have a wonderful New Year. Thank you all, and remember to stay human!

Much Love,
A.

Publishing News
12/20/2023

It's no surprise to anyone here that I like to make people think, engage in philosophy and ethics, and maintain full control over my work through self-publishing.

So, it's super-duper-expialidociously fabulous when I'm still able to engage with some of my favorite publications and have my work published by them. For a few years now I've been following 'After Dinner Conversations', a monthly literary magazine and podcast of short fiction exploring philosophy and ethics. I’m delighted that they have acquired the rights to publish 'Is Neurocide the Same as Genocide?', which is, of course, one of the many moral dilemmas in the Spiral Worlds universe. This one is a match made in heaven and a great platform to introduce new readers to the series!

Happy holidays to me and to you too! 😘❤️

Much Love,
A.

#scifi #scifibooks #sciencefiction #sciencefictionbooks #ethics #philosophy #shortstories #shortstory

The Story So Far...
10/17/2023

We're just one day away from Parity's release day. Here's a recap of the story so far.

Parity release delayed to October
6/15/2023

Hey there, it’s been a while.

I wanted to let you know that I’m going to delay the release of Parity for a few months. I have completed the novel; however, it still needs at least two rounds of professional editing, and I don’t want to rush what is an essential part of a quality product. The delay is caused by heavy demands on my time from my day job, as businesses seek support in making sense of AI and adopting it ethically and safely. We are experiencing a moment, and if this is an area that interests you, reach out and I will share some great free resources. Use the Contact Us page on the site, as I am not spending a lot of time on social media.

However, this story and this world are everything to me. It is not forgotten and will be in your hands no later than the 11th of October 2023. In the meantime... Stay human!

A.

Alexandra @ Under The Radar SFF Podcast
4/16/2023

I had the pleasure of being a guest on the Under The Radar SFF Podcast hosted by Blaise Alcona, and it was a lovely experience. Blaise is a fantastic host. His enthusiasm for science fiction and fantasy was infectious, and his insightful questions allowed us to explore different topics related to sci-fi. You can listen to the podcast here.

SPIRAL WORLDS' NEWSLETTER

Stay in touch by subscribing to my mailing list, where I answer readers' questions about the series. I'd love to hear from you.

Much love, A.

© Alexandra Almeida 2022